INNOVATION

The next decade will bring game-changing innovations to the automotive industry. Through our research, we anticipate the needs of the market ahead of time.

RESEARCH AREAS – OVERVIEW

IN-CABIN ANALYSIS (DMS & OMS)

RESEARCH PROJECTS

UNISCOPE 3D

The UNISCOPE-3D project aims to develop highly innovative software algorithms that enable robust and accurate three-dimensional (3D) human body tracking and analysis using a single camera. By leveraging advanced computer vision techniques and Deep Learning (DL) algorithms, the software will (i) extract 3D keypoints inside and on the surface of human bodies in 3D space from single (monocular) camera images and (ii) exploit this information to generate a comprehensive understanding of complex 3D human actions, movements, and interactions in real-world environments.

This understanding of humans is essential for a wide range of industries and applications. In this project, we apply and evaluate it in emotion3D’s core business area of automotive Occupant Monitoring, where it can enable advanced human-machine interaction and user experience, intelligent and personalized safety (e.g. personalized airbag deployment), and automation.

Partners: TU Vienna

Funding agency: This project is funded by the FFG within the Basisprogramm.

Roadguard

There is a growing consensus among experts that, while DMS is a valuable safety feature, it should be complemented by comprehensive, context-aware solutions and adherence to rigorous regulations to truly achieve the ambitious goals set by initiatives like the EU Vision Zero for road fatalities.

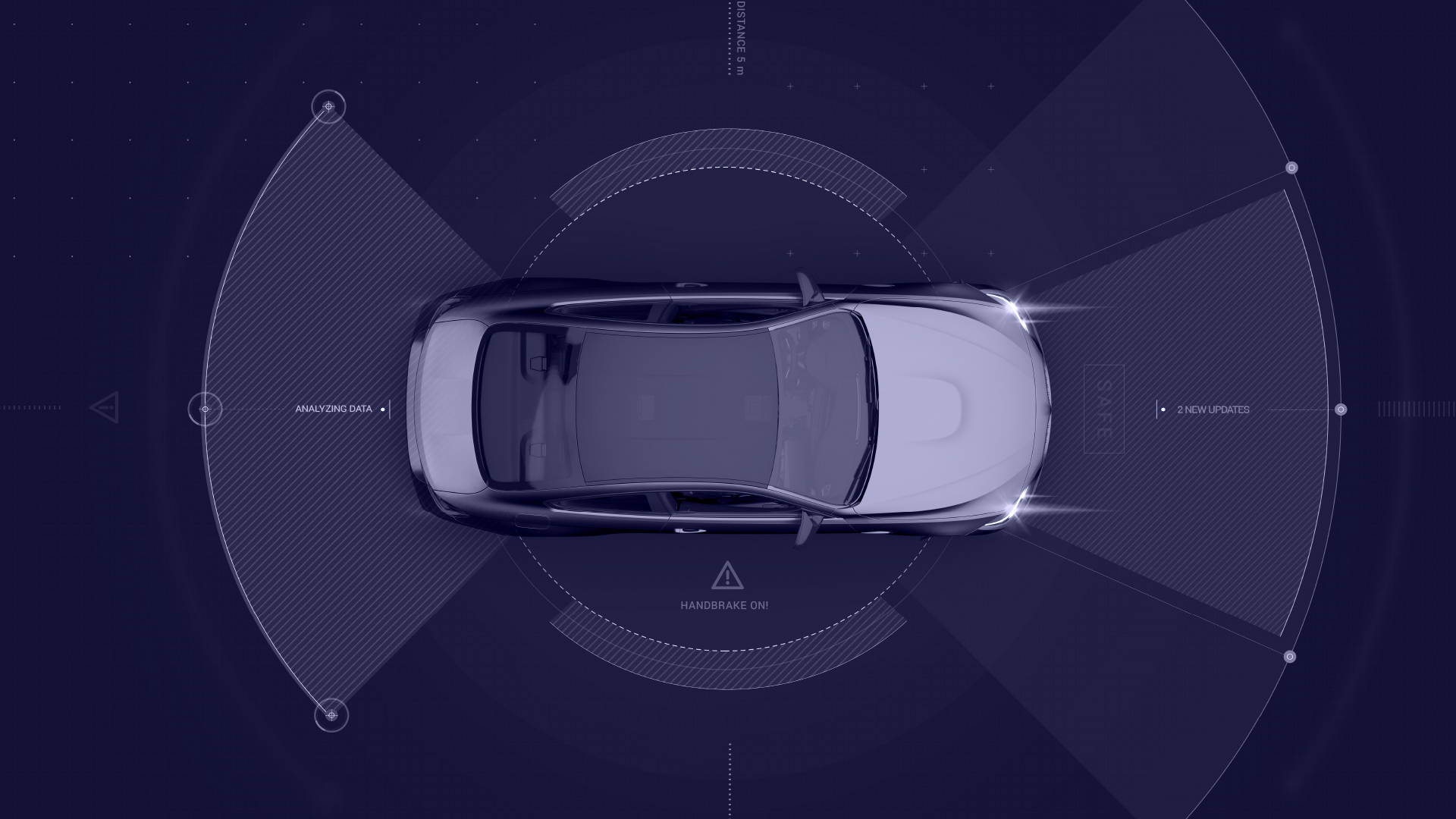

Currently, the systems available in European series vehicles are limited in their ability to detect and respond to different types of driver inattention. Traditional vehicle sensors are not yet equipped to meet the requirements of partially automated driving or contextual warning mechanisms. As a result, there is still considerable room for more advanced systems that better assist drivers in various driving scenarios. More specifically, Roadguard is working towards combining interior and exterior sensing capabilities.

The system utilizes advanced AI-empowered edge devices to monitor drivers and road users, setting new safety benchmarks. The project’s key contribution involves developing a holistic perception and assessment system that seamlessly integrates data from diverse sensors. This comprehensive approach addresses regulatory challenges and establishes our USP—providing unparalleled safety for all road users, especially the vulnerable, and contributing to responsible and technologically advanced mobility in the EU.

Partners: Virtual Vehicle | ZKW | leiwand.ai | motobit

Funding agency: This project is funded by the FFG within the Digital Technologies-2023 program.

MOSAIC

MOSAIC’s goal is to advance technological independence in the landscape of automated systems by tackling the challenge of integrating diverse perception hardware configurations. The aim is for automated systems to perceive their surroundings in a non-invasive manner and without a single point of failure, with unparalleled accuracy and decreased complexity.

emotion3D will contribute to the MOSAIC project by developing advanced perception technologies that enhance the cognitive intelligence of automated systems. This effort involves integrating multiple state-of-the-art sensor technologies, such as camera and radar sensors, together with sophisticated accompanying algorithms, to significantly improve the system’s capacity to perceive and interpret its environment with high accuracy. This approach reduces the risk of single points of failure and increases the overall robustness and reliability of automated systems.
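As a rough illustration of why combining camera and radar reduces the risk of a single point of failure, the sketch below fuses two distance estimates by inverse-variance weighting and falls back to whichever sensor is still available. This is a generic textbook technique, not the method used in MOSAIC; the function name `fuse`, the `Estimate` type, and all numbers are hypothetical, and variances are assumed to be strictly positive.

```python
from typing import Optional, Tuple

# Hypothetical representation: (distance in metres, variance in m^2).
Estimate = Tuple[float, float]


def fuse(camera: Optional[Estimate], radar: Optional[Estimate]) -> Optional[Estimate]:
    """Fuse camera and radar distance estimates by inverse-variance weighting.

    A sensor that delivers no reading is passed as None. If only one sensor
    is available, its estimate is returned unchanged; if none is available,
    the result is None.
    """
    available = [e for e in (camera, radar) if e is not None]
    if not available:
        return None

    # Less noisy sensors (smaller variance) receive larger weights.
    weights = [1.0 / variance for _, variance in available]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, available)) / total

    # The fused variance is never larger than the smallest input variance.
    return value, 1.0 / total


# Illustrative numbers only.
both = fuse(camera=(10.0, 4.0), radar=(12.0, 1.0))
radar_only = fuse(camera=None, radar=(12.0, 1.0))
nothing = fuse(camera=None, radar=None)
```

With both sensors present, the fused estimate lies closer to the lower-variance radar reading; if the camera drops out, the function still returns a usable estimate from radar alone.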

Partners: 48 European partners including Infineon | Ford Otomotiv | TTTech | TTControl | NXP | AVL

Funding agency: This project is funded by the HORIZON-JU-Chips-2024-2-RIA Program.

A-IQ Ready

Within this project, emotion3D is optimizing driver monitoring functions so that the driver’s work can be planned predictively, helping to avoid dangerous situations.

Partners: 49 European partners including Huawei | TTTech | Synopsys | Mercedes-Benz | AVL

Funding agency: This project is funded by the HORIZON-JU-Chips-2024-2-RIA Program.

More information available at https://www.aiqready.eu/

Empathic Vehicle

Partners: TU Vienna | University of Applied Sciences Technikum Vienna

Funding agency: This project is funded by Wirtschaftsagentur Vienna within the program

IN-CABIN MONITORING FOR AUTOMATED DRIVING

Our research in this area focuses on:

- Advanced driver availability monitoring systems for Level 3

- Whole cabin safety & UX during autonomous driving

- Safety in automated shuttles

RESEARCH PROJECTS

SafePassenger3D

More information about the project is available on the official site of the FFG (Austrian Research Promotion Agency).

Funding agency: This project is funded by the FFG within the Early Stage 2019 program.

i3DOC

Partners: Mission Embedded | emotion3D

Funding agency: This project is funded by the Wirtschaftsagentur Wien as part of the funding initiative Co-Create 2017 within the FORSCHUNG program.

It is essential that this increased safety is provided for everybody, i.e. that it works equally well for each person who sits down in a car. The topic of trustworthy and ethical AI must therefore be considered during development of the analysis algorithms. The frameworks and tools we are currently developing ensure that all safety systems work without bias and provide optimal protection for everybody.

RESEARCH PROJECTS

Smart-RCS

Studies have shown that seatbelt-wearing female occupants are at a 73% higher risk of suffering serious injuries than seatbelt-wearing male occupants (Univ. Virginia). Female occupants are also at an up to 17% higher risk of being killed in an accident than male occupants (NHTSA).

As long as passive safety systems cannot distinguish between the occupant’s individual characteristics, it is impossible to achieve optimal protection for everybody.

For the first time, touchless 3D imaging sensors are used to derive precise real-time information about each occupant, such as body position and pose, body physique, age, gender, etc. Based on this information, the Smart-RCS computes the optimal deployment strategy tailored to each individual occupant.

By taking those relevant factors into account, Smart-RCS optimizes the protective function while simultaneously mitigating the risks of doing unnecessary harm.

Smart-RCS aims to disrupt the passive safety systems market by introducing personalized and situation-aware protection.

Learn more on our project website: www.smart-rcs.eu

Funding agency: This project is funded by the European Commission within the Horizon 2020 FTI program.

Safe.ICM

In addition to the precise description of a complex context, this also allows a meaningful evaluation of the information. For example, accuracy can be evaluated under several different conditions. In a further step, this evaluation or analysis of the networks enables exact statements about the diversity, non-discrimination, fairness and robustness of the algorithms.

Accordingly, the goal of this project is to develop a framework for developing and optimizing trustworthy AI applications to increase the transparency, diversity, non-discrimination, fairness, and robustness of machine learning algorithms. Since traceability is essential for the use of algorithms in the automotive industry and in safety-critical environments, the framework developed in this project will be applied, tested, continuously improved and optimized using algorithms for vehicle in-cabin analysis.
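To make the idea of evaluating accuracy under several different conditions more concrete, the following minimal sketch computes accuracy per condition and the largest gap between conditions. It is an illustration only and not the Safe.ICM framework; the function names, the condition labels ("daylight", "night"), the class labels, and the toy records are all hypothetical.

```python
from collections import defaultdict


def accuracy_by_condition(records):
    """Return {condition: accuracy} for (condition, predicted, actual) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for condition, predicted, actual in records:
        total[condition] += 1
        if predicted == actual:
            correct[condition] += 1
    return {condition: correct[condition] / total[condition] for condition in total}


def accuracy_gap(per_condition):
    """Largest accuracy difference between any two conditions."""
    values = list(per_condition.values())
    return max(values) - min(values)


# Hypothetical toy data: (condition, predicted label, actual label).
records = [
    ("daylight", "belted", "belted"),
    ("daylight", "unbelted", "unbelted"),
    ("daylight", "belted", "belted"),
    ("daylight", "belted", "unbelted"),
    ("night", "belted", "belted"),
    ("night", "belted", "unbelted"),
    ("night", "unbelted", "belted"),
    ("night", "unbelted", "unbelted"),
]

per_condition = accuracy_by_condition(records)
gap = accuracy_gap(per_condition)
print(per_condition, gap)
```

A large gap between conditions is one simple signal that an algorithm does not perform equally well for every situation or group, which is the kind of statement such an evaluation is meant to support. The same grouping could be applied to other attributes, provided the evaluation data is annotated accordingly.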

Funding agency: This project has received funding from aws, by the means of the “Nationalstiftung für Forschung, Technologie und Entwicklung”.

ACCOMPLISH

ACCOMPLISH contributes to current research and advances state-of-the-art techniques and technologies along several research paths. These include AI-based, automated assessment, recommendation and certification of compliance at different levels, ranging from organisation and data/AI operations to integrated systems/solutions and selected datasets/models. They also include compliant-by-design and compliant-by-default (on-the-job) data/AI operations, from data harvesting, retention, security, bias detection and quality assurance to AI/ML model design, training, evaluation, execution and observability/monitoring. These operations act as exemplary compliance technology enablers and complement the underlying data spaces.

ACCOMPLISH designs a novel AI-based compliance and certification framework cross-cutting the different regulatory/legal, environmental, cybersecurity and business/industry-specific compliance perspectives while always ensuring a human-in-the-loop approach.

Partners: 24 European partners including MAN | Ubitech | Tofas Turk Otomobil | Whirlpool | PWC

Funding agency: This project is funded by the HORIZON-CL4-2024-DATA-01-01 Program.

Within this field, we are working with large Tier-1 partners on innovative approaches to provide occupant-aware advanced driver assistance systems.

RESEARCH PROJECTS

CarVisionLight

– Aimed at strongly improved image perception, and therefore environment perception, the headlamps provide tailored, adaptive scene illumination for their assigned lamp cameras.

– The stereoscopic, high-resolution camera system provides significantly improved data for glare-free high beam control, such as object classification, positioning (horizontal, vertical), distance, direction of movement, lane,

– The stereoscopic camera system enables highly improved processing of data relevant for autonomous driving, such as addressed and labelled lanes, topology, free space, obstacles, traffic participants, pedestrians, etc.

More information about the project is available on the official site of TU Vienna.

Partners: ZKW | TU Vienna | emotion3D

Funding agency: This project is funded by the FFG within the IKT der Zukunft program.

SmartProtect

Partners: ZKW | TU Vienna | emotion3D

Funding agency: This project is funded by the FFG within the MdZ-2019 program.

RESEARCH PROJECTS

SyntheticCabin

Partners: BECOM Systems | Rechenraum | TU Vienna | emotion3D

Funding agency: This project is funded by the FFG within the MdZ-2020 program.